The key ingredient in performance art of any kind is the human performer. For our Physical Computing final, Lisa Jamhoury, Danielle Butler, and I would like to use electronic sensors and computational media to better translate the action and emotion of a performer to his or her audience. For this project, we are focusing on aerial performance. We would like to draw an audience closer to an aerial performer, and to do so outside the confines of a traditional performance space. To meet these goals, we will create a portable device that takes inputs from the performer and translates them into lighting and projected visuals.

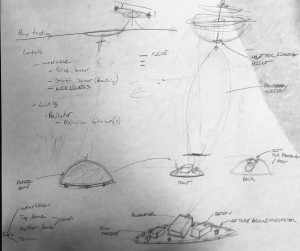

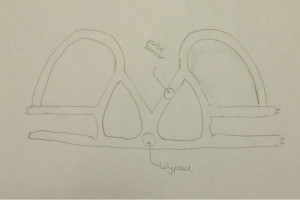

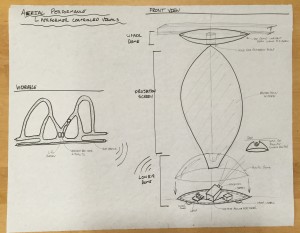

Some of our original sketches:

One main priority is to use only physical inputs that produce reliable, predictable translations. I think the key is choosing inputs we can fully count on and coding them to work as fluidly as possible. (I'm not sold on the Xbox Kinect.)
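One common way to make a noisy analog reading, such as a stretch sensor, feel fluid is an exponential moving average. The sketch below is a minimal illustration in Python, not our actual code: it assumes raw readings arrive as integers in the 0 to 1023 range (a typical 10-bit microcontroller ADC), and the `smooth` function and its `alpha` default are hypothetical choices.

```python
def smooth(readings, alpha=0.2):
    """Exponential moving average over a sequence of raw sensor readings.

    Each output moves a fraction `alpha` of the way from the previous
    output toward the new raw reading. Smaller alpha = smoother but laggier.
    """
    if not 0 < alpha <= 1:
        raise ValueError("alpha must be in (0, 1]")
    smoothed = []
    value = None
    for raw in readings:
        # Seed the filter with the first reading so it does not ramp up from zero.
        value = raw if value is None else value + alpha * (raw - value)
        smoothed.append(value)
    return smoothed


# A single noisy spike (900) is damped instead of making the lights jump.
print(smooth([500, 500, 900, 500, 500], alpha=0.2))
```

The trade-off is latency: a heavily smoothed signal reacts more slowly to a deliberate movement, so `alpha` would need tuning against real rehearsal data.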

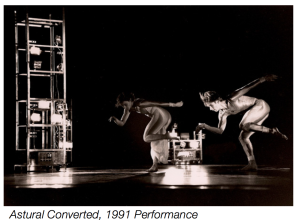

The basis of my idea for interacting with the performer comes from my work restoring a sculpture designed by Robert Rauschenberg. In 2012, I was asked by Trisha Brown Dance Company to restore the electronic set pieces for Astral Converted. The set is composed of eight wireless, aluminum-framed towers, each containing light-sensor-activated sound and lighting. Designed by Robert Rauschenberg in 1989 and constructed by engineers from Bell Laboratories, the towers turn their lamps on and off based on the performers' movements. Each tower also plays a different part of the musical score via its own tape deck.

I'm excited about taking this idea of car headlights and tape decks controlled by a dancer's movements and scaling it up with digital sensors and stronger visuals.

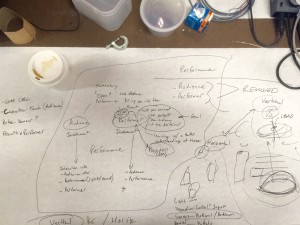

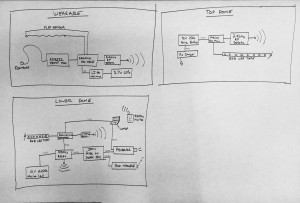

Here is our initial block diagram:

Our project will take readings from a stretch sensor and a heart-rate sensor worn by the performer and translate them into RGBW LED strip lighting and projected images generated by a p5 sketch.
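To make the block diagram more concrete, here is a hypothetical sketch of the mapping step in Python. It assumes the stretch reading has already been smoothed and scaled to 0.0 (relaxed) through 1.0 (fully stretched), that the heart-rate sensor reports beats per minute, and that each RGBW channel takes an 8-bit value (0 to 255). The color scheme, the `RGBW` type, and the `frame_color` function are illustrative assumptions, not our final design; in the real project this logic could live in the p5 sketch or on the board driving the strips.

```python
import math
from dataclasses import dataclass


@dataclass
class RGBW:
    r: int
    g: int
    b: int
    w: int


def clamp(x, lo=0.0, hi=1.0):
    """Keep a value inside [lo, hi]."""
    return max(lo, min(hi, x))


def to_byte(c):
    """Convert a 0.0-1.0 channel intensity to an 8-bit LED value."""
    return round(255 * clamp(c))


def frame_color(stretch, bpm, t):
    """Map one frame of sensor data to an RGBW color (hypothetical scheme).

    stretch: smoothed stretch reading, 0.0 (relaxed) to 1.0 (fully stretched)
    bpm:     heart rate in beats per minute
    t:       current time in seconds
    """
    s = clamp(stretch)
    # Heart rate drives a pulse: 1.0 exactly on each beat, 0.0 halfway between beats.
    beat_phase = (t * bpm / 60.0) % 1.0
    pulse = 0.5 + 0.5 * math.cos(2 * math.pi * beat_phase)
    # Keep a 30% floor so the strip never goes fully dark between beats.
    brightness = 0.3 + 0.7 * pulse
    # Stretch shifts the color from cool blue (relaxed) to warm red (extended).
    return RGBW(
        r=to_byte(s * brightness),
        g=0,
        b=to_byte((1.0 - s) * brightness),
        w=to_byte(0.2 * pulse),  # a little white flash on each beat
    )


# Performer fully extended, exactly on a heartbeat at 60 BPM.
print(frame_color(1.0, 60, 0.0))
```

Keeping the mapping a pure function of its inputs means it can be tested and tuned without the performer present, and swapping in a different color scheme only touches this one function.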

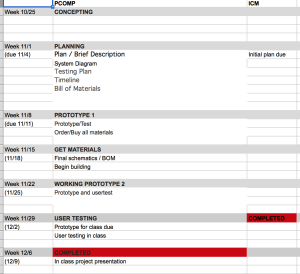

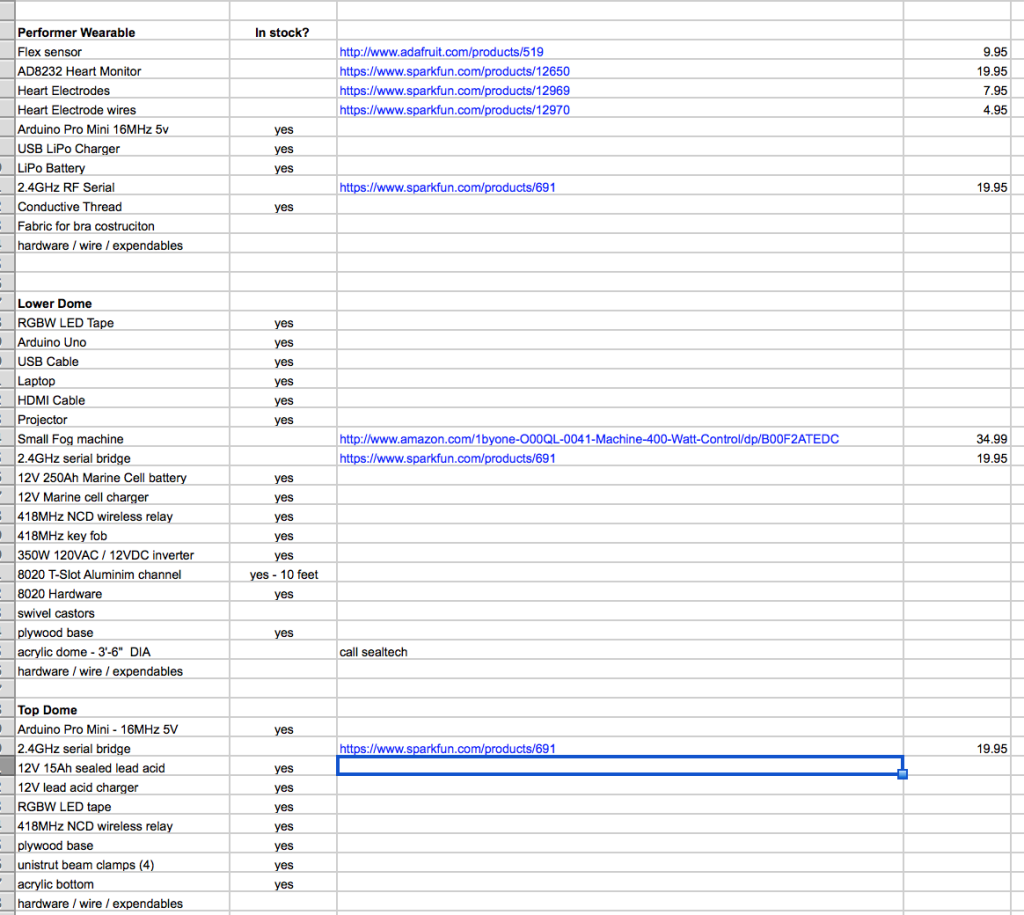

Here are our timeline and BOM (bill of materials) so far: